Overview

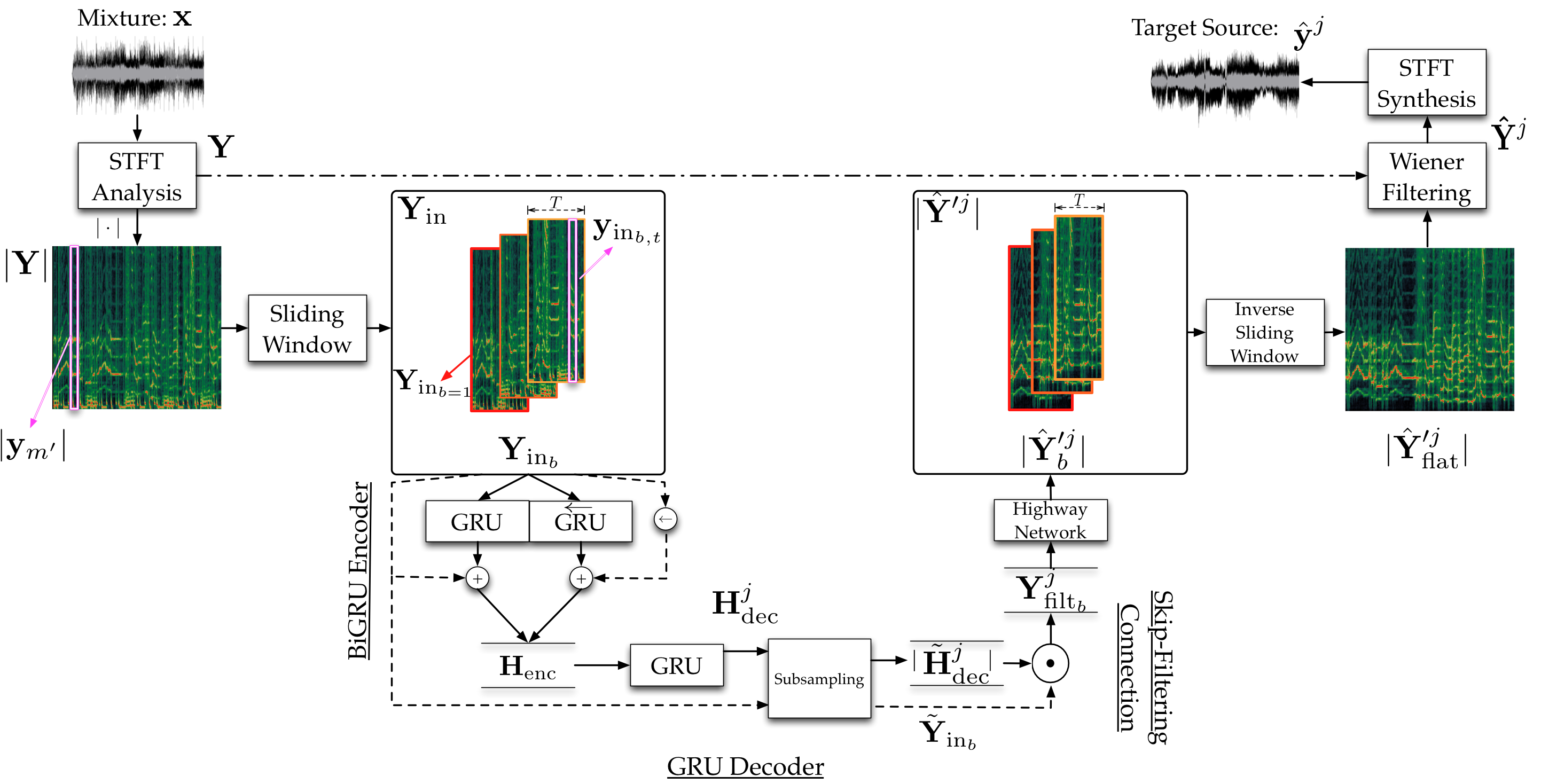

This webpage demonstrates our work on singing voice source separation using gated recurrent units (GRUs) and skip-filtering connections. We propose a method that learns time-frequency masks directly from the observed mixture magnitude spectra.

An illustration of our proposed method.
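The masking step described above can be sketched in a few lines. This is a minimal illustration, not our trained model: `toy_mask_estimator` is a hypothetical stand-in for the GRU network, and the array shapes and random input are assumptions chosen only to make the example runnable. It shows the core idea of a skip-filtering connection, where a mask estimated from the mixture magnitude is multiplied element-wise with that same magnitude.

```python
import numpy as np


def apply_skip_filtering(mixture_mag, estimate_mask):
    """Filter the mixture magnitude with a mask predicted from that same magnitude."""
    mask = estimate_mask(mixture_mag)  # same shape as mixture_mag
    return mask * mixture_mag          # element-wise product: the skip-filtering step


def toy_mask_estimator(mixture_mag):
    """Hypothetical stand-in for a trained network (assumption, not the real model).

    Returns a sigmoid of the mean-centered log-magnitude, so every value lies in (0, 1).
    """
    log_mag = np.log1p(mixture_mag)
    return 1.0 / (1.0 + np.exp(-(log_mag - log_mag.mean())))


# Toy mixture magnitude spectrogram: 4 time frames x 6 frequency bins (assumed sizes).
rng = np.random.default_rng(0)
mixture_mag = np.abs(rng.normal(size=(4, 6)))

voice_mag = apply_skip_filtering(mixture_mag, toy_mask_estimator)
```

Because the mask values lie between 0 and 1, the filtered magnitude never exceeds the mixture magnitude in any time-frequency bin. In a full system the estimated magnitude would be combined with the mixture phase and inverted back to a waveform; that step is omitted here.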

Comparison with Previous Approaches:

- Two deep feed-forward neural networks for predicting both binary and soft time-frequency masks, denoted as GRA2 and GRA3 [2].

- A deep convolutional encoder-decoder approach for estimating a soft time-frequency mask, denoted as CHA [3].

- Two denoising auto-encoders operating on the common fate signal representation [4], denoted as STO1 and STO2.
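To make the distinction between binary and soft time-frequency masks concrete, the sketch below computes both from known source magnitudes. This is an illustration under stated assumptions, not the GRA2, GRA3, or CHA systems: those approaches predict masks with neural networks, whereas here the masks are oracle masks derived from toy values, and the function names `soft_mask` and `binary_mask` are hypothetical.

```python
import numpy as np


def soft_mask(target_mag, other_mag, eps=1e-8):
    """Ratio mask: fraction of each bin's magnitude attributed to the target."""
    return target_mag / (target_mag + other_mag + eps)


def binary_mask(target_mag, other_mag):
    """Binary mask: 1 where the target is strictly louder than the other source, else 0."""
    return (target_mag > other_mag).astype(float)


# Toy magnitudes for two sources (assumed values), 2 time frames x 2 frequency bins.
voice = np.array([[3.0, 1.0], [0.5, 2.0]])
accompaniment = np.array([[1.0, 1.0], [1.5, 2.0]])

soft = soft_mask(voice, accompaniment)      # values in [0, 1]
hard = binary_mask(voice, accompaniment)    # values in {0, 1}
```

A soft mask assigns each bin a share of the mixture energy, while a binary mask assigns each bin entirely to one source. Ties go to the other source in this sketch because the comparison is strict.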

Our proposed methods are:

- GRU-S, GRU-D, and GRU-DWF.

Audio Demo on Evaluation Subset:

Audio Demo on Commercial Music Mixtures:

Acknowledgements:

We would like to thank the authors of [1] for making the results of the evaluation available. The major part of the research leading to these results has received funding from the European Union's H2020 Framework Programme (H2020-MSCA-ITN-2014) under grant agreement no 642685 MacSeNet. Konstantinos Drossos was partially funded by the European Union's H2020 Framework Programme through ERC Grant Agreement 637422 EVERYSOUND. A minor part of the computations leading to these results was performed on a TITAN-X GPU donated by NVIDIA.

References:

[1] A. Liutkus, F.-R. Stoter, Z. Rafii, D. Kitamura, B. Rivet, N. Ito, N. Ono, and J. Fontecave, "The 2016 signal separation evaluation campaign," in Latent Variable Analysis and Signal Separation: 13th International Conference, LVA/ICA 2017, 2017, pp. 323-332.

[2] E.-M. Grais, G. Roma, A.J.R. Simpson, and M.-D. Plumbley, "Single-channel audio source separation using deep neural network ensembles," in Audio Engineering Society Convention 140, May 2016.

[3] P. Chandna, M. Miron, J. Janer, and E. Gomez, "Monoaural audio source separation using deep convolutional neural networks," in Latent Variable Analysis and Signal Separation: 13th International Conference, LVA/ICA 2017, 2017, pp. 258-266.

[4] F.-R. Stoter, A. Liutkus, R. Badeau, B. Edler, and P. Magron, "Common fate model for unison source separation," in International Conference on Acoustics, Speech and Signal Processing (ICASSP 2016), 2016, pp. 126-130.